Basic Three Layer Neural Network in Python

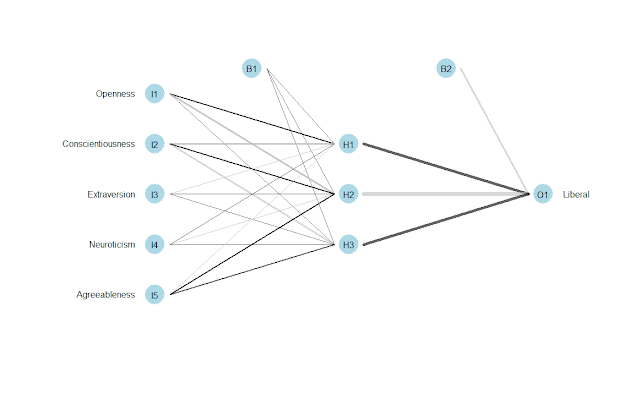

Introduction

As part of understanding neural networks, I was reading Make Your Own Neural Network by Tariq Rashid. The book itself can be painful to work through, as it is written for a novice, not just in algorithms and data analysis, but also in programming.

The code is a verbatim transcription from the text (see the Source section). I published it to better understand how neural networks are designed, a task made easy by the use of a Jupyter Notebook, and not to present it as my own work. I do hope that it helps others develop their talents with data analytics.

GitHub Source: AzureNotebooks/Basic Three Layer Neural Network in Python.ipynb at master · JamesIgoe/AzureNotebooks (github.com)

Overview

The code itself develops as follows:

Constructor

- set number of nodes in each input, hidden, output layer
- link weight matrices, wih and who
- weights inside the arrays are w_i_j, where link is from node i to node j in the next layer
- set learning rate
- activat...
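The constructor items spelled out above (node counts, the two weight matrices, and the learning rate) can be sketched roughly as follows. This is a minimal illustration, not the notebook's code. Only the names `wih` and `who` come from the text. The class name, the attribute names, the `seed` parameter, the normal-distribution initialisation, and the choice of one matrix row per receiving node are all assumptions made for the example.

```python
import numpy as np


class NeuralNetwork:
    """Constructor-only sketch of a three-layer network (illustrative names)."""

    def __init__(self, input_nodes, hidden_nodes, output_nodes, learning_rate, seed=None):
        # Number of nodes in the input, hidden, and output layers.
        self.inodes = input_nodes
        self.hnodes = hidden_nodes
        self.onodes = output_nodes

        # Assumption: `seed` is a hypothetical convenience parameter that
        # makes the random initial weights reproducible.
        rng = np.random.default_rng(seed)

        # Link weight matrices: wih links input -> hidden, who links hidden -> output.
        # Assumption: weights are drawn from a normal distribution centred on zero,
        # with standard deviation 1/sqrt(nodes in the receiving layer), and each
        # matrix has one row per node in the receiving layer.
        self.wih = rng.normal(0.0, self.hnodes ** -0.5, (self.hnodes, self.inodes))
        self.who = rng.normal(0.0, self.onodes ** -0.5, (self.onodes, self.hnodes))

        # Learning rate used when the weights are updated.
        self.lr = learning_rate


network = NeuralNetwork(input_nodes=3, hidden_nodes=4, output_nodes=2, learning_rate=0.3, seed=0)
print(network.wih.shape, network.who.shape)
```

With three input, four hidden, and two output nodes, `wih` has shape (4, 3) and `who` has shape (2, 4), so each matrix can left-multiply a column vector of signals from the preceding layer.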