The IQ ~ Religion Matters Vector

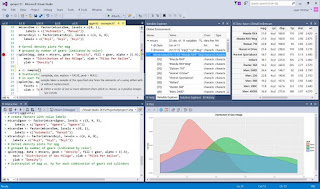

I was reminded of this topic this morning by an article, "New Study: Religious People Are Less Smart but Atheists Are Psychopaths", and realized that there are many studies showing the religious to be less intelligent, but this is rarely published. It would 'bite' most of the population, liberal and conservative alike.

Note: I plan on redoing this with more solid numbers, and possibly different measures of religiosity.

Example Code

    # Correlations on ReligionMatters and Average IQ
    oecdData <- read.table("OECD - Quality of Life.csv", header = TRUE, sep = ",")
    iqVector <- oecdData$IQ
    religionMattersVector <- oecdData$ReligionMatters

    # Pearson correlation test between the two country-level vectors
    cor.test(iqVector, religionMattersVector)

    # Linear model of IQ on ReligionMatters
    lm1 <- lm(iqVector ~ religionMattersVector)
    summary(lm1)

    # Plot the chart.
    plot(iqVector, religionMattersVector, col = "blue", main = "IQ ~ Religion Matters Vector" , abline(lm(religionMattersVector ~ iqVector),...
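The plotting call above is cut off, so here is a minimal sketch of how a scatter plot with a fitted line is commonly drawn in base R: `plot()` first, then `abline()` as a separate call. The data are synthetic placeholders generated with `rnorm()`, not the OECD figures, and the axis labels, the `fit` variable, and the red line color are my assumptions rather than what the original chart used.

```r
# Synthetic placeholder data: NOT the values from "OECD - Quality of Life.csv".
set.seed(42)
iqVector <- round(rnorm(30, mean = 98, sd = 5))
religionMattersVector <- pmin(100, pmax(0, 250 - 2 * iqVector + rnorm(30, sd = 12)))

# Fit the same direction that is plotted: y (ReligionMatters) on x (IQ).
fit <- lm(religionMattersVector ~ iqVector)

# Draw the scatter plot first so a plotting device and axes exist.
plot(iqVector, religionMattersVector,
     col = "blue",
     main = "IQ ~ Religion Matters Vector",
     xlab = "IQ",
     ylab = "ReligionMatters")

# Then overlay the fitted regression line on the existing plot.
abline(fit, col = "red")
```

Fitting `religionMattersVector ~ iqVector` keeps the line consistent with the plot's axes; a model fitted the other way around, as in `lm1`, would not line up if passed to `abline()` on this chart.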